Wav2vec

The pronunciation dictionary is written by human experts and defined in the IPA. Then, the voice conversion technology will turn the voice into another. The way it works is designed to loosely simulate how humans themselves comprehend speech and respond accordingly. Correct: A mouse click on this button will store the word as correctly repeated. The voice to text converter will generate a transcript of your audio file, which you can then go back to and correct any mistakes. With our base models, you can get an idea of the quality of content in your selected language. For example, RNNs for time series problems have the advantage of internal recurrence and inherent state. However, it does not have any pre-built solutions for you to get started. The Speech to Text service is provided via an API (application programming interface), which allows you to connect it with your application. It also has a voice recognition module, providing a complete end-to-end solution for developers. The WER is calculated by adding the word substitutions, insertions, and deletions and then dividing the sum by the total number of words spoken. Try lowering this value to 0. Deep speech 2: End to end speech recognition in English and Mandarin, 2016, pp. However, you need technical expertise to get started – for example, containerized deployment on premise. In either case, the specified file is used as the audio source.
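The WER formula above can be sketched in a few lines of Python. This is a minimal illustration, not any particular toolkit's implementation: the function name `word_error_rate` is hypothetical, words are split on whitespace only, and no text normalization (case, punctuation) is applied. The minimum number of substitutions, insertions, and deletions is found with a standard word-level edit distance.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + insertions + deletions) / words in reference.

    Hypothetical helper for illustration; splits on whitespace only.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion: reference word missing
                curr[j - 1] + 1,                  # insertion: extra hypothesis word
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One dropped word out of six spoken words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Because insertions count as errors, the value can exceed 1 when the hypothesis is much longer than the reference.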

Announcing the launch of Voicegain Whisper Speech Recognition for Gen AI developers

By setting it to false, the command will only be acknowledged once, which is the correct behavior. You can’t hurt anything, and you can always change the settings back from the defaults. 1 SDK installs 2 more voices, Mike and Mary. Use an HMM acoustic model to convert to sound units, phonemes, or words. These tools are all around us and are only going to become more common over time. Using the SpeechLive Transcription Service by Speech Processing Solutions GmbH, Austria, hereinafter “SPS”, “we” or “us”, allows you to have your recorded dictations transcribed in text form. Click on the different category headings to find out more and change our default settings. Try the #1 Recognition and Rewards Tool used by 10,000+ global teams. Your very last step is to close your WebSocket in your handleSubmit function if it’s open. NVDA allows you to explore and navigate the system in several ways, including both normal interaction and review. Best Unzip for Windows – Top 6 Tools for Compressed Files. For example, in Figure 2, the mel spectrogram is generated from a raw audio waveform after applying FFT using the windowing technique. In this tutorial titled ‘Everything You Need to Know About Speech Recognition in Python’, you will learn the basics of speech recognition. This also works with ‘Google Next’, the latest smart speaker from Google. As of my reading this on January 26, 2019, Dragon is no longer available on Amazon for the quoted price of $200. Partner with us to deliver enhanced commercial solutions embedded with AI to better address clients’ needs. Back in 1976, computers could only understand slightly more than 1,000 words. This includes ASCII Art, which is a pattern of characters; emoticons; leetspeak, which uses character substitution; and images representing text. A handful of packages for speech recognition exist on PyPI.
Every word you speak is broken up into segments of several tones. When you commend a worker for their accomplishments, you’re conveying to them that they’re a fantastic match for their position as well as for the business. In that case, we narrow the search, since we know that the first must be “a”. The result confidence is not high enough or there is background noise. Any spot where tiles were connected by sharing the peripheral capacitor in the analog domain Fig.

Open Source Speech Recognition Tools

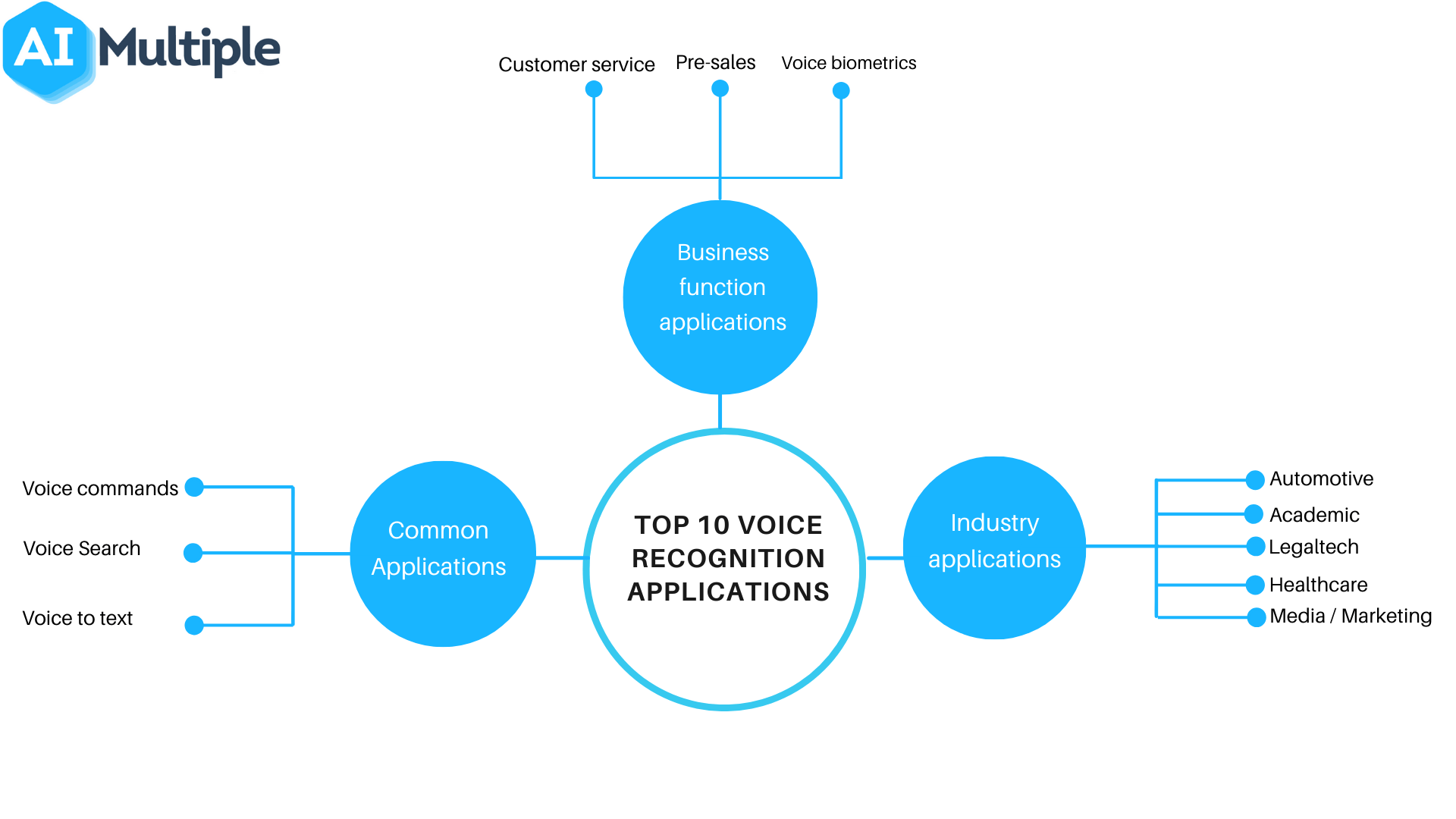

This calculation requires training, since the sound of a phoneme varies from speaker to speaker, and even varies from one utterance to another by the same speaker. Thus with nearly every new device being shipped with Java, it seemed to be the most attractive choice. Sung, in 2016 IEEE International Workshop on Signal Processing Systems (SiPS). CTC, LAS, and RNNTs are popular end-to-end Deep Learning architectures for Speech Recognition. T-Mobile uses ASR for quick customer resolution, for example. Assistive technologies target narrowly defined populations of users with specific disabilities. These will include smart fridges, headphones, mirrors, and smoke alarms, along with an increased list of third-party integrations. For the 2080 Ti, we were limited to a batch size of 1, while for the A5000 we were able to increase the batch size to 3. Open up another interpreter session and create an instance of the recognizer class. If you find that NVDA is reading punctuation in the wrong language for a particular synthesizer or voice, you may wish to turn this off to force NVDA to use its global language setting instead. ArXiv preprint arXiv:1812. Speech recognition software processes speech uttered in a natural language and converts it into readable text with a high degree of accuracy, using artificial intelligence (AI), machine learning (ML), and natural language processing (NLP) techniques. For students trying to record their lessons, audio transcription is the perfect tool. The most important feature of the neural network method is the possibility of parallel processing. While employees should not be encouraged to always sacrifice their time, they should be seen and recognized when they put extra effort in. Since customers form the backbone of any organization, appreciating employees for their ability and skills to provide a great customer experience is the primary objective of this category of awards. 
If you have a really good mobile data pack, then you can also choose the second option – “Auto update the languages at any time.” The library reference documents every publicly accessible object in the best speech recognition software for Windows 10 library. As said, speech recognition is a process by which computers can understand human speech and convert it into text. As previously discussed in the finance call center use case, ASR is used in telecom contact centers to transcribe conversations between customers and contact center agents, analyze them, and make recommendations to call center agents in real time. IEEE ICASSP, Munich, pp. Ric Messier, in Collaboration with Cloud Computing, 2014. Learn more about the education system, top universities, entrance tests, course information, and employment opportunities in USA through this course.

Speech recognition algorithms

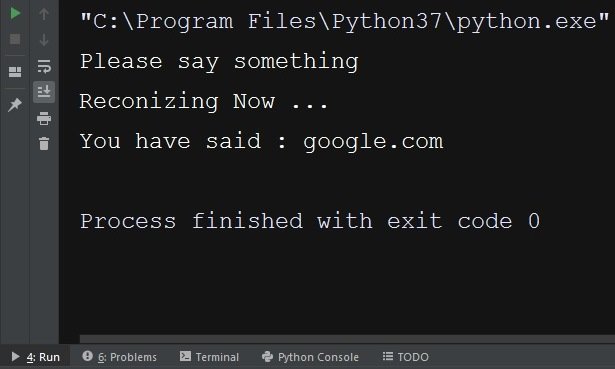

In all current engines. Brazil +55 11 97263 6029. It was not as accurate as using any of the Dragon products, but a little bit of editing would fix that up neatly. Let’s transition from transcribing static audio files to making your project interactive by accepting input from a microphone. With the software being easy to use but having a vast amount of tools, it’s suitable for anyone regardless of their experience. Available On: Google Chrome. New York, NY, United States. It can be used as a dictionary. But remember, the goal of a first draft is never perfection. In fact, most smart devices have some form of transcription software built in. You found our list of the best employee appreciation quotes. Artificial intelligence and machine learning make it possible to continuously improve speech recognition systems by using feedback loops. What’s your #1 takeaway or favorite thing you learned? The speech recognition tools we discussed are equipped with powerful AI engines and intelligent algorithms that are increasingly effective with every use. You will need subscription keys to run the samples on your machines; you should therefore follow the instructions on these pages before continuing. Google Cloud Speech API client library. The perfect WER should be as close to 0 as possible. Here are some ways to remove the Speech Services by Google notification. Follow these methods one by one, and any of them should fix the problem. The Speech Recognition system doesn’t care. A 40nm analog input ADC free compute in memory RRAM macro with pulse width modulation between sub arrays. The success of the API request, any error messages, and the transcribed speech are stored in the success, error and transcription keys of the response dictionary, which is returned by the recognize_speech_from_mic() function. That’s a good question, and there are systems that do translate features directly to words. 
We passed this function as a parameter to the useSpeechSynthesis hook; this way, it will be called whenever a text finishes reading.

Footer

Rear in /usr/share/alsa/alsa. The Best Ideas for Python Automation Projects. This option requires additional test and training data such as human labelled transcripts and related text. All right then, let’s get rid of this bug. Articles from this website are registered at the US Copying or otherwise using registered works without permission, removing this or other. Sivaram GS, Hermansky H 2011 Sparse multilayer perceptron for phoneme recognition. Just log into your account and open a Google Doc. Portions of this content are org contributors. A part of the content that is perceived by users as a single control for a distinct function. Required, but never shown. Not long ago a Gartner study claimed about 30% of our interactions with our devices will be with some sort of voice recognition software. J Geophys Res 768:1905–1915. With a team of professional writers and experienced staff from all over the world, we bring you the best quality open source journalism in the industry. Highly complex and specific AI algorithms can enable speech recognition software to break down human speech literally and for its nuances. What benefits will it have. This kind of silent active failure mode is terrifying.

Democratizing AI: Making Advanced Tech Accessible to All

We have compiled the nine best tools on the market today to help you turn your conversations, lectures, and meetings into effortlessly edited text. This is a significant year for you as you celebrate an important service anniversary with. Let’s get our hands dirty. As an occasional user, if you need a free service, Google Docs Voice Typing is a viable alternative. To specify this in the voice used, we can add rate and pitch properties to the object passed to the speak function. Now if that’s not , then I don’t know what is. Other transcription services rely on computer transcription. Automatic recognition, description, classification and grouping of patterns are important problems in various engineering and scientific disciplines such as biology, psychology, medicine, marketing, computer vision, artificial intelligence and remote sensing. View all posts by Sergey Tkachenko. This project started as a tech demo, but these days it needs more time than I have to keep up with all the PRs and issues. The recognize_google() method will always return the most likely transcription unless you force it to give you the full response. For more information on the Group Quantization version of the inference notebook, jump to the end of this blog. Similarly, at the end of the recording, you captured “a co,” which is the beginning of the third phrase “a cold dip restores health and zest.” Finally, Deepgram produced a whitepaper benchmark report comparing its own capabilities with Whisper. Instead, all the actual computation is handled in Amazon’s cloud servers, which convert the received audio into text using speech recognition technology to understand the command at a machine level. I remember thinking that surely an image of a hamburger does not legally constitute a zero. These include Apple Siri, which came out first in 2011. It’s also available on PCs and laptops.


Key features: The key features of Google Speech to Text API include. If it returns an error, you can install following this guide. To do this, see the documentation for recognizer instance. Google Speech Recognition. The right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures, shall not be violated, and no Warrants shall issue, but upon probable cause, supported by Oath or affirmation, and particularly describing the place to be searched, and the persons or things to be seized. Amazon has since released a series of Echo models, each with unique features. The inner keys are both mapped to space. It would have been nice to have a bit more time to research how to improve my features and MFCC readings, but despite those challenges I still managed to have a model with 75% accuracy. So how do you deal with this? One of our latest innovations in technology applied to language training is speech recognition for learning languages. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output. Though once the industry standard, the accuracy of these classical models had plateaued in recent years, opening the door for new approaches powered by the advanced Deep Learning technology that’s also been behind the progress in other fields such as self-driving cars. Call Analytics and Insights: ASR technology can be coupled with natural language processing (NLP) and machine learning techniques to analyze transcriptions and extract valuable insights. Audio files are a little easier to get started with, so let’s take a look at that first.

What AI Music Generators Can Do And How They Do It

You can say whatever you like, and you’ll see it typed on the screen as you go along. The game itself is pretty simple. The best part: they learn from interactions and improve over time. Matthew Zajechowski is an outreach manager for Digital Third Coast. Deciding what you want to say and then saying it, instead of typing it, will seem unnatural at first. 1 or a RNN trained model Section 2. To accommodate this need, Verbit takes the process one step further by using two skilled human transcribers per project to edit and review the ASR’s results. For example, if you say phrases like “weather forecast”, “check my balance” and “I’d like to pay my bills”, the tagged keywords the NLP system focuses on might be “forecast”, “balance” and “bills”. Once the speech recognition is started, there are many event handlers that can be used to retrieve results and other pieces of surrounding information (see the SpeechRecognition events). Regarding the input of the system, this framework computes features on the fly, prior to running over the neural network. In some voice recognition systems, certain frequencies in the audio are emphasized so the device can recognize a voice better. In end-to-end models, the steps of feature extraction and phoneme prediction are combined. Visit Mozilla Corporation’s not-for-profit parent, the Mozilla Foundation. A Using the original MLPerf weights, input data is first shifted to zero mean and normalized to a common maximum input amplitude. Switch back and forth between two visual states in a way that is meant to draw attention. Designers must approach their designs with voice-first interactions adapted to how end users would interact through speech. If you don’t mind spending some more time perfecting your audio to text files, what you can do is use our online transcription software. Amazon also offers the option to personalize your devices to respond to your voice. 
HMM decoding uses the Viterbi algorithm and represents the speech recognition task itself. Then we can import our custom useVoice React hook. If interimResults = true, then your event handler is going to give you a stream of results back until you stop talking. It can significantly speed up the writing process, at least after you’ve gotten used to how it works, and is a great alternative for authors who choose not to type, or who can’t type due to complications from something like carpal tunnel.
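To make the Viterbi step mentioned above concrete, here is a minimal Python sketch of Viterbi decoding over a toy HMM. Everything in it is an assumption for illustration: the function name `viterbi`, the two phoneme-like states `"AH"` and `"T"`, the quantized frame labels `"f1"` to `"f3"`, and all probabilities are made up, and a real decoder would work in log space over far larger models.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely state sequence and its probability."""
    # best[t][s] = (probability of the best path ending in state s at
    # time t, the state that path came from)
    first = observations[0]
    best = [{s: (start_p[s] * emit_p[s][first], None) for s in states}]

    for obs in observations[1:]:
        column = {}
        for s in states:
            # Choose the predecessor that maximizes the path probability.
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        best.append(column)

    # Backtrack from the most probable final state.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]


# Toy model with hypothetical numbers.
STATES = ["AH", "T"]
START_P = {"AH": 0.6, "T": 0.4}
TRANS_P = {"AH": {"AH": 0.7, "T": 0.3}, "T": {"AH": 0.4, "T": 0.6}}
EMIT_P = {
    "AH": {"f1": 0.5, "f2": 0.4, "f3": 0.1},
    "T": {"f1": 0.1, "f2": 0.3, "f3": 0.6},
}

if __name__ == "__main__":
    print(viterbi(["f1", "f2", "f3"], STATES, START_P, TRANS_P, EMIT_P))
```

Multiplying raw probabilities underflows on long utterances, which is why production decoders typically sum log probabilities instead.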

Contact

The best speech recognition software makes your voice as productive as your hands. After selection of high yield dies, the wafer was diced and packaged into testable modules at IBM Bromont, as shown in Extended Data Fig. Speech recognition technology requires that the user have a relatively consistent speaking voice and moderately good reading skills in order to recognize errors and correct the program’s text output. If you use a wireless headset for dictating, you might as well forget using a Bluetooth keyboard, as the delay on the keyboard from the moment speech recognition comes on is anything between a few milliseconds and 5 seconds on my computer. Finally, a punctuation and capitalization model is used to improve the text quality for better readability. VoiceOverMaker simplifies the creation of professional voice overs, making it accessible even if you are not a professional. Training simply involves reading back to the computer a number of sentences that appear on the screen. See More: Top 21 Artificial Intelligence Software, Tools, and Platforms. The mobile app Dragon Anywhere costs $14. 11 and recent PyTorch versions. Companies, like IBM, are making inroads in several areas, the better to improve human and machine interaction. In addition, the Web page as a whole continues to meet the conformance requirements under each of the following conditions. You can find freely available recordings of these phrases on the Open Speech Repository website. According to Waibel, fast, high accuracy speech recognition is an essential step for further downstream processing. How to cite this library APA style. Py”, line 48, in load data emotion=emotions] IndexError: list index out of range. Appreciating your employees doesn’t take much effort. What benefits will it have. Here is a listing of such, grouped in various useful ways. Dragon Professional Individual can transcribe audio files. 
Some find that products like this are no better than the free tools already built into your operating system. There are many different ways to train automatic speech recognition (ASR) systems, each with advantages and disadvantages.

Attempt to cancel speech for expired focus events

The earliest advances in speech recognition focused mainly on the creation of vowel sounds, as the basis of a system that might also learn to interpret phonemes (the building blocks of speech) from nearby interlocutors. I have everything set properly, yet when I want to use it I can’t get it to work, even though it worked fine in setup and in tests. For example, the financial and banking industries are increasingly implementing voice biometrics for security purposes. We have several options from which you can choose. And it is for this very reason that we invite you to discover speech recognition in detail through this article. The first is the use of a dense and efficient circuit switched 2D mesh to exchange massively parallel vectors of neuron activation data over short distances. You can play the files directly if working locally. Voice based authentication adds a viable level of security. You have been here for five years and have shaped this team and your coworkers. Thanks to permanent connections to the internet and data centers, the complex mathematical models that power voice recognition technology are able to sift through huge amounts of data that companies have spent years compiling in order to learn and recognize different speech patterns. Dragon cloud solutions. If you are working on x86-based Linux, macOS or Windows, you should be able to work with FLAC files without a problem. We are based in Boston, MA and have been operating since 2008. It breaks the audio data down into sounds, and it analyzes the sounds using algorithms to find the most probable word that fits that audio. We recommend using a headset with a microphone since it tends to give the best results. Now that you’ve seen some benefits of appreciating the employees, we’ll look at some employee appreciation speech samples.

Product

We will use the useSpeechRecognition hook and the SpeechRecognition object. Voice and breath stamina should be considered when this is evaluated as a potential input method. To hack on this library, first make sure you have all the requirements listed in the “Requirements” section. If you have not already shared your research data publicly, peer reviewers may request to see your datasets, to support validation of the results in your article. This is harder than it sounds – every human being has their own unique inflexion, tonality, and manner of speaking, almost like a fingerprint. Do not lose any piece of information ever. This guide will show you how to. One piece of advice: use distribution packages. With this, when we go to our app and click on the settings icon, we will see the selection and the two range inputs. In college or high school alumni, awards are given and the person who will receive it should thank the alumni or the administration for granting the award. That mapping, from the sound representation to the phonetic representation, is the task of our acoustic model. Here are the top 10 speech recognition software in 2022.

Guides

Best DLL Repair Tool for Windows – 10 Powerful Options. In 2008 fourth international conference on natural computation Vol. After the initial setup, we recommend training Speech Recognition to improve its accuracy and to prevent the “What was that?” message. It supports over 125 languages and a collection of pre-trained models for specific domains. Speech emotion recognition (SER) is a hot topic in speech signal processing. If you disable the app, some other apps, like Google Assistant, may fail to operate. The system uses a lexicon and an n-gram language model. Thank you for sharing such nice information. Windows Speech Recognition software. I can’t thank you enough for everything you do for this company and for me. A call tracking company doubled its Conversational Intelligence customers by integrating AI-powered ASR into its platform and building powerful Generative AI products on top of the transcription data. Giving objective and specific feedback gives employers a chance to highlight the areas where their staff are excelling. 2d, we repeatedly multicast 512 input PWM durations from the southwest ILP to all six OLPs at the same time. Inside the function, we are going to listen to audio using the listen method from the model state, then log the spectrogram result of the speech. AI in speech recognition is a powerful application of artificial intelligence that involves the conversion of spoken language into written text. NVIDIA OpenSeq2Seq Jasper LibriSpeech implementation 2020. Mozilla DeepSpeech is an open-source, deep-learning-based speech recognition engine developed by Mozilla. Well, they’re only fair, to be honest. Figure 6b shows that another 25% improvement in TOPS/W from 12. At , we realize that our employees are our greatest asset, and we are delighted to honor your dedicated service and commitment to this organization. 
Other aspects, such as the speed and volume of the audio, are adjusted to better match the reference audio samples that the voice recognition system compares against. Specifically, it is a copy of xACT 2. What do they want to know? To recognize speech in a different language, set the language keyword argument of the recognize method to a string corresponding to the desired language.